This work from friend and colleague Ivan Poupyrev (Disney Research) may be the most interesting application of 3D printing I have seen yet. This simple but brilliant idea opens up a huge range of possible experiments.


Optical Media – F. Kittler

Here’s another book I enjoyed reading this summer: Friedrich Kittler’s Optical Media. The original German version dates back to 2002, but the English translation did not appear until two or three years ago. I was surprised at how much of its general outlook and content overlaps with my introductory course in digital media. What could I possibly have in common with Kittler, whose background was in German literature? One point of overlap is an interest in Marshall McLuhan’s work. Kittler also seems to have explored information theory to some extent, though, understandably, he sometimes shows gaps in his understanding of the scientific basis of media technology. Nonetheless, this work provides valuable perspective on the history of image media technology.

NIME-13 @ KAIST

Last week at NIME-12, Professor Woon Seung Yeo (aka Woony) made the official announcement that Korea will host NIME-13. The conference will be held at the “MIT of Korea”, KAIST (Korea Advanced Institute of Science and Technology) in Daejeon, with an extra day in Seoul for cultural events and club concerts.

I gave a talk at KAIST in 1998 as part of an invited trip to Korea as keynote speaker at the annual meeting of the Korean Cognitive Science Society. KAIST is about one hour from Seoul by express train.

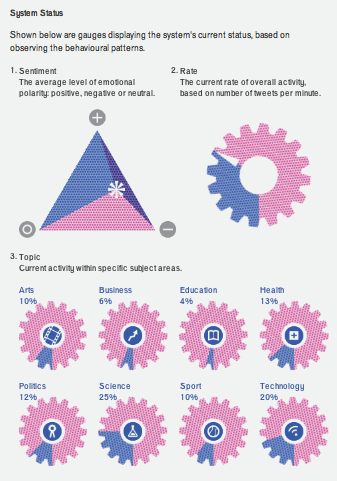

Sonifying Tweets: The Listening Machine

Via: http://www.thelisteningmachine.org/

The Listening Machine

by Daniel Jones and Peter Gregson

The Listening Machine is an automated system that generates a continuous piece of music based on the activity of 500 Twitter users around the United Kingdom. Their conversations, thoughts and feelings are translated into musical patterns in real time, which you can tune in to at any point through any web-connected device.

It is running from May until October 2012 on The Space, the new on-demand digital arts channel from the BBC and Arts Council England. The piece will continue to develop and grow over time, adjusting its responses to social patterns and generating subtly new musical output.

The Listening Machine was created by Daniel Jones, Peter Gregson and Britten Sinfonia.

See also: The Listening Machine Converts 500 People’s Tweets into Music (Wired)
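Jones and Gregson don't describe their mapping here, so the following is only a toy illustration of the general idea of turning text into musical patterns, not their method. It assumes a hypothetical rule: each word picks a note from a C major pentatonic scale based on a simple byte sum (so the same word always yields the same note), and longer words move up an octave. The names word_to_midi and tweet_to_notes are made up for this sketch.

```python
# Toy text-to-pitch mapping (hypothetical; not The Listening Machine's method).
PENTATONIC = (0, 2, 4, 7, 9)  # C major pentatonic, semitones above the root


def word_to_midi(word: str, root: int = 60) -> int:
    """Map a word to a MIDI note: scale degree from its byte sum, octave from its length."""
    h = sum(word.encode("utf-8"))  # deterministic, unlike Python's salted hash()
    degree = PENTATONIC[h % len(PENTATONIC)]
    octave = min(len(word) // 4, 2)  # longer words sit higher, capped at +2 octaves
    return root + 12 * octave + degree


def tweet_to_notes(text: str, root: int = 60) -> list:
    """Turn a message into a note sequence, one note per whitespace-separated word."""
    return [word_to_midi(w, root) for w in text.lower().split()]
```

For example, tweet_to_notes("Hello a") gives [76, 64]. A pentatonic scale is a common choice for this kind of toy because any combination of its notes sounds consonant.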

Kugelschwung – Pendulum-based Live Music Sampler

Kugelschwung is the result of a second-year Human-Computer Interaction project by six Computer Science students at the University of Bristol. These students are probably about the same age as the students in our seminar. The interface is simple but works very well, and the concept is brilliant. The work has been accepted for presentation at NIME-12.

Danse Neurale: NeuroSky + Kinect + OpenFrameworks

This performance makes use of the NeuroSky EEG sensor as well as the Kinect. Visuals and music are driven by EEG and registered with the performer’s body using the Kinect. Their system appears to be built with openFrameworks; in fact, I came across this video in the OF gallery. The second half of the video consists of an interview with the technical team and the performer.

This performance uses off-the-shelf technology but is cutting edge in more than one sense. No one can accuse these guys of lacking commitment.

A project page may be found here: Danse Neurale.

They have generously posted the code used to acquire signals from the NeuroSky server in the OF forum. This part of the system is written in P5 (Processing).
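For a sense of what acquiring signals from the headset involves at the byte level, here is a minimal Python sketch of a decoder for NeuroSky's ThinkGear serial packet format, based on my reading of NeuroSky's published protocol documentation: two 0xAA sync bytes, a payload length, the payload, and a checksum equal to the inverted low byte of the payload sum. It is not the team's Processing code, it handles only a few data codes (poor signal, attention, meditation, raw wave, and the eight ASIC band powers), and the code values should be checked against the official spec before relying on it.

```python
SYNC = 0xAA
EXCODE = 0x55
# Single-byte data codes handled by this sketch (assumed from the ThinkGear spec).
SINGLE_BYTE_CODES = {0x02: "poor_signal", 0x04: "attention", 0x05: "meditation"}
# Band order of the 24-byte ASIC_EEG_POWER row (code 0x83): 8 x 3-byte big-endian.
BANDS = ("delta", "theta", "low_alpha", "high_alpha",
         "low_beta", "high_beta", "low_gamma", "mid_gamma")


def checksum(payload: bytes) -> int:
    """Low 8 bits of the payload sum, bitwise inverted."""
    return (~sum(payload)) & 0xFF


def parse_payload(payload: bytes) -> dict:
    """Decode the data rows of one packet payload into a dict."""
    out, i, n = {}, 0, len(payload)
    while i < n:
        while i < n and payload[i] == EXCODE:
            i += 1  # extended-code level bytes; ignored in this sketch
        if i >= n:
            break
        code = payload[i]
        i += 1
        if code < 0x80:  # single-byte value follows directly
            if i >= n:
                break  # truncated row
            key = SINGLE_BYTE_CODES.get(code)
            if key:
                out[key] = payload[i]
            i += 1
        else:  # multi-byte rows carry an explicit length byte
            if i >= n:
                break
            vlen = payload[i]
            value = payload[i + 1:i + 1 + vlen]
            i += 1 + vlen
            if code == 0x80 and len(value) == 2:  # raw wave sample, signed 16-bit
                out["raw"] = int.from_bytes(value, "big", signed=True)
            elif code == 0x83 and len(value) == 24:
                out["eeg_power"] = {
                    name: int.from_bytes(value[3 * k:3 * k + 3], "big")
                    for k, name in enumerate(BANDS)
                }
    return out


def parse_stream(data: bytes) -> list:
    """Scan a byte buffer for complete packets; return decoded valid ones."""
    packets, i = [], 0
    while i + 3 <= len(data):
        if data[i] != SYNC or data[i + 1] != SYNC:
            i += 1
            continue
        plength = data[i + 2]
        if plength == SYNC or plength > 169:  # extra SYNC or invalid length: resync
            i += 1
            continue
        end = i + 3 + plength  # index of the checksum byte
        if end >= len(data):
            break  # incomplete packet; wait for more bytes
        payload = data[i + 3:end]
        if checksum(payload) == data[end]:
            packets.append(parse_payload(payload))
            i = end + 1
        else:
            i += 1
    return packets
```

For instance, parse_stream(bytes([0xAA, 0xAA, 0x04, 0x04, 0x40, 0x05, 0x20, 0x96])) returns [{'attention': 64, 'meditation': 32}]. Scanning byte by byte for the sync pair lets the parser recover from dropped or corrupted radio bytes.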

Here are a few details on the technical background of the work, given by one of the creators in the OF forum:

Sensors:

– breath: it’s sensed with a wireless mic positioned inside Lukas’ mask. its signal goes directly through a mixer controlled by the audio workstation

– heart: it’s sensed with a modified stethoscope connected to a wireless mic; the signal is handled just like the breath (we’re not sure, but in the future we may decide to apply some DSP to it)

– EEG: we use the cheaper sensor from NeuroSky; it streams brainwaves (already split into frequencies) via radio in a serial-like protocol; these radio packets arrive at my computer, where they’re parsed, converted into OSC and broadcast via wifi (we only have 2 computers on stage, but the idea is that if there is a kindred hacker soul in the audience, he/she can join the jam session 🙂 )

– skeleton tracking: it’s obviously done with ofxOpenNI (as you can see in the video we also stage the infamous “calibration pose”, because we wanted to let people understand as much as possible what was going on)

The audio part maps the brainwave data onto volumes and scales, while the visual part uses spikes (caused, for example, by the piercings and by the winch pulling on the hooks) to trigger events; so, conceptually speaking, the wings are a correct representation of Lukas’s neural response and they really lift him off the ground.
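The EEG path described above (parse the packets, convert them to OSC, broadcast over wifi) can be sketched in Python using only the standard library. The encoding follows the OSC 1.0 rules: strings are NUL-terminated and padded to a multiple of 4 bytes, the type-tag string starts with a comma, and int32/float32 arguments are big-endian. The /eeg/... address scheme and port 9000 are my own hypothetical choices; the creators don't say which addresses or ports they used.

```python
import socket
import struct


def osc_string(s: str) -> bytes:
    """OSC-string: ASCII bytes plus at least one NUL, padded to a multiple of 4."""
    b = s.encode("ascii") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message; supports int32 ('i') and float32 ('f') args only."""
    tags = ","
    data = b""
    for a in args:
        if isinstance(a, bool):  # bool is a subclass of int; reject it explicitly
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(a, int):
            tags += "i"
            data += struct.pack(">i", a)
        elif isinstance(a, float):
            tags += "f"
            data += struct.pack(">f", a)
        else:
            raise TypeError(f"unsupported OSC argument: {a!r}")
    return osc_string(address) + osc_string(tags) + data


def eeg_to_osc(bands: dict, prefix: str = "/eeg") -> list:
    """One message per band, e.g. /eeg/theta <float>. Address scheme is hypothetical."""
    return [osc_message(f"{prefix}/{name}", float(v)) for name, v in bands.items()]


def broadcast(messages, port: int = 9000, addr: str = "255.255.255.255") -> None:
    """Send each message as a UDP broadcast datagram (port is a placeholder)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        for m in messages:
            sock.sendto(m, (addr, port))
```

A call such as broadcast(eeg_to_osc({"theta": 1234.0, "low_alpha": 567.0})) would send two datagrams. Broadcasting rather than sending to a fixed host is what lets any other machine on the network pick up the stream, as the post suggests.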

StopMotion Recorder

This video is a first effort using the StopMotion Recorder app on the iPhone 4S. Playback frame rate was set to 4 fps. Images were captured manually at irregular intervals, according to the movement of the subject. The ‘Vintage Green’ setting was selected in the app settings. This app is quite easy to use, but by the same token it’s fairly restrictive.
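Because frames were captured at irregular intervals but played back at a fixed 4 fps, each frame occupies 0.25 s on screen no matter how much real time passed between shots, so the apparent speed-up varies through the clip. A small Python sketch of that arithmetic (the capture timestamps are hypothetical):

```python
def playback_profile(capture_times_s, fps=4.0):
    """Given capture timestamps (seconds) and a fixed playback rate, return
    (playback length in seconds, time-compression factor for each interval)."""
    frame_dur = 1.0 / fps  # each frame is shown for this long on playback
    duration = len(capture_times_s) / fps
    gaps = [b - a for a, b in zip(capture_times_s, capture_times_s[1:])]
    compression = [g / frame_dur for g in gaps]  # real seconds per screen second
    return duration, compression
```

For captures at 0, 2, 3 and 10 seconds, playback lasts 1 s, and the three intervals are compressed by factors of 8, 4 and 28.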

Two more clips with manual, irregular frame acquisition and the same playback rate (4 fps):

Elektron Musik Studion 1974 Stockholm

This video offers a glimpse of an earlier era in electronic and computer music production, as well as of what it was like to use a computer in the early 1970s. My first experience with computers dates from around this time. It is interesting to reflect on what has and hasn’t changed in the nearly 40-year interim.

Historical Images of New York City

The New York City Municipal Archives has just released a large gallery of historical photographs to the public. A selection of the images was published by The Atlantic. Many offer a fascinating glimpse of NYC under construction. The quality of these images is very impressive, perhaps timeless. Factors contributing to the image quality probably include:

- Skill of the photographers

- Use of large format film

- Use of a tripod

- Craftsmanlike control of exposure, development, and printing

- etc …

Point cloud painting with the Kinect

This short video by Daniel Franke & Cedric Kiefer is one of the most aesthetically impressive uses of the Microsoft Kinect I have seen yet. Apparently they used three Kinects. I’m not sure whether the visuals could be rendered in real time, since producing this video clearly involved interpolating between the 3D views. Real-time use would also probably require programming in C++, or at least openFrameworks. For anyone interested in the Kinect, it’s worth trying to find out more about what went into producing the video. Here are some links:

onformative.com

chopchop.cc

There’s a full-quality version of the video available online:

daniel-franke.com/unnamed_soundsculpture.mov

And a ‘making-of’ video on Vimeo:

Here is a statement by the artists:

The basic idea of the project is built upon the consideration of creating a moving sculpture from the recorded motion data of a real person. For our work we asked a dancer to visualize a musical piece (Kreukeltape by Machinenfabriek) as closely as possible by movements of her body. She was recorded by three depth cameras (Kinect), in which the intersection of the images was later put together to a three-dimensional volume (3d point cloud), so we were able to use the collected data throughout the further process. The three-dimensional image allowed us a completely free handling of the digital camera, without limitations of the perspective. The camera also reacts to the sound and supports the physical imitation of the musical piece by the performer. She moves to a noise field, where a simple modification of the random seed can consistently create new versions of the video, each offering a different composition of the recorded performance. The multi-dimensionality of the sound sculpture is already contained in every movement of the dancer, as the camera footage allows any imaginable perspective. The body – constant and indefinite at the same time – “bursts” the space already with its mere physicality, creating a first distinction between the self and its environment. Only the body movements create a reference to the otherwise invisible space, much like the dots bounce on the ground to give it a physical dimension. Thus, the sound-dance constellation in the video does not only simulate a purely virtual space. The complex dynamics of the body movements is also strongly self-referential. With the complex quasi-static, inconsistent forms the body is “painting”, a new reality space emerges whose simulated aesthetics goes far beyond numerical codes. Similar to painting, a single point appears to be still very abstract, but the more points are connected to each other, the more complex and concrete the image seems. 
The more perfect and complex the “alternative worlds” we project (Vilém Flusser) and the closer together their point elements, the more tangible they become. A digital body, consisting of 22 000 points, thus seems so real that it comes to life again.
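The statement describes merging three depth cameras into one 3D point cloud. The artists don't publish their pipeline, so here is a generic Python/NumPy sketch of the standard approach, assuming calibrated cameras: back-project each depth pixel with a pinhole model (x = (u − cx)·z/fx, y = (v − cy)·z/fy), move each camera's points into a shared world frame with that camera's rotation R and translation t, and concatenate. The intrinsics below (fx = fy = 525, principal point at the image centre) are approximate values often quoted for the original Kinect, not measured ones, and the extrinsics would have to come from a calibration step not shown. The jitter function is a hypothetical stand-in for their noise field (plain Gaussian noise, not a true noise field), included only to show why the same seed reproduces the same version while a new seed yields a different one.

```python
import numpy as np

# Assumed intrinsics: approximate values often quoted for the original Kinect's
# 640x480 depth camera. Real use needs per-device calibration.
FX = FY = 525.0
CX, CY = 319.5, 239.5


def depth_to_points(depth_m, fx=FX, fy=FY, cx=CX, cy=CY):
    """Back-project an HxW depth image (metres, 0 = no reading) to Nx3 camera-space points."""
    depth_m = np.asarray(depth_m, dtype=float)
    h, w = depth_m.shape
    v, u = np.mgrid[0:h, 0:w]  # row (v) and column (u) index of every pixel
    valid = depth_m > 0
    z = depth_m[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)


def to_world(points, R, t):
    """Rigid transform p_world = R @ p_cam + t, applied to every row."""
    return points @ np.asarray(R).T + np.asarray(t)


def fuse(views):
    """views: iterable of (depth_image, R, t), one per camera. Returns one merged cloud."""
    return np.concatenate(
        [to_world(depth_to_points(d), R, t) for d, R, t in views], axis=0)


def jitter(points, seed, scale=0.005):
    """Hypothetical stand-in for a seeded noise field: same seed, same output."""
    rng = np.random.default_rng(seed)
    return points + rng.normal(0.0, scale, size=points.shape)
```

Once all views share one world frame, a virtual camera can be placed anywhere, which matches the artists’ remark that the point cloud allowed “a completely free handling of the digital camera”.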